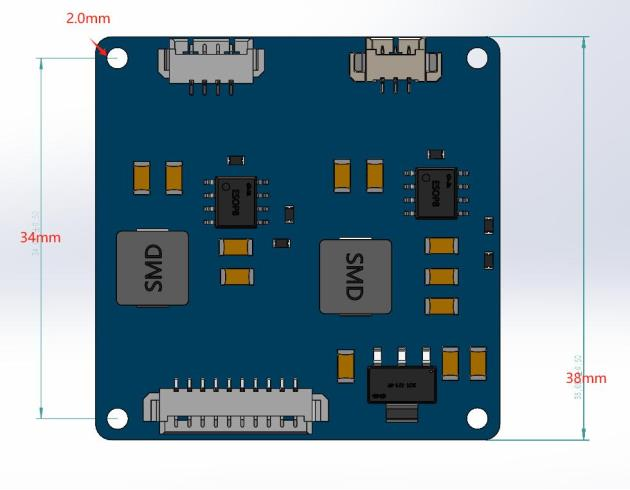

Drone AI Intelligent Tracking Module

Technical Analysis of Drone AI Tracking Module

1. Introduction

In high-speed flight, drones rely on sensors and camera systems to capture environmental information, and the key to tracking a target is obtaining its position from the video stream in real time. Precise target capture and tracking is a complex and challenging task. One of the core technologies in the Drone AI Tracking Module is Optical Flow, which estimates the motion of pixels between consecutive frames and uses that motion to follow the target.

2. Optical Flow

Optical Flow is a motion estimation algorithm based on changes in pixel intensity between sequential images. By analyzing pixel intensity changes between two consecutive frames, it estimates the movement of an object in the image. Optical Flow operates under two core assumptions:

- Brightness constancy assumption: The brightness of a point on an object remains constant from one frame to the next.

- Small motion assumption: The object moves only slightly between consecutive frames, making pixel movement easier to compute.
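Taken together, the two assumptions lead to the standard optical flow constraint. The derivation below is a textbook sketch; the symbols $I$ (image intensity), $(u, v)$ (pixel displacement per frame), and $I_x, I_y, I_t$ (partial derivatives of $I$) are notation introduced here for illustration and do not come from the module's documentation.

Brightness constancy says a point keeps its intensity as it moves by $(u, v)$ between frames:

$$I(x + u,\, y + v,\, t + 1) = I(x, y, t)$$

Because the motion is small, the left-hand side can be approximated by a first-order Taylor expansion:

$$I(x + u,\, y + v,\, t + 1) \approx I(x, y, t) + I_x u + I_y v + I_t$$

Substituting one into the other gives the optical flow constraint equation:

$$I_x u + I_y v + I_t = 0$$

This is a single equation in two unknowns, so the flow at one pixel cannot be recovered from that pixel alone. Practical algorithms therefore add a further constraint, such as assuming that neighboring pixels share the same motion.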

The fundamental idea of Optical Flow is to infer the direction and speed of movement by comparing the intensity differences at the same positions in consecutive frames. Because of the small motion assumption, the method works best when the target moves only a short distance between frames, so tracking fast-moving targets generally calls for a high frame rate or a coarse-to-fine (image pyramid) scheme. Common Optical Flow algorithms include Lucas-Kanade Optical Flow (which focuses on local motion estimation) and Horn-Schunck Optical Flow (which uses a global smoothness constraint to solve the flow field).
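The local estimation idea behind Lucas-Kanade can be illustrated with a minimal sketch. It assumes a single window, grayscale frames stored as lists of rows, and no image pyramid; the function name `lucas_kanade_window` and the synthetic `bowl` test pattern are hypothetical helpers written for this example, not part of the module.

```python
def lucas_kanade_window(frame1, frame2, row, col, half=2):
    """Estimate the flow (u, v), in pixels per frame, for one small window.

    frame1, frame2: grayscale frames as lists of rows of floats.
    (row, col): window centre; half: half-width of the square window.
    Returns None when the window has too little texture to solve for (u, v).
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            # Spatial gradients by central differences, temporal by frame difference.
            ix = (frame1[r][c + 1] - frame1[r][c - 1]) / 2.0
            iy = (frame1[r + 1][c] - frame1[r - 1][c]) / 2.0
            it = frame2[r][c] - frame1[r][c]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it

    # Least-squares solution of [sxx sxy; sxy syy] [u v]^T = -[sxt syt]^T.
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None  # flat or edge-only window: the motion is ambiguous
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


def bowl(size, cx, cy):
    """Synthetic test pattern: a smooth quadratic bowl centred at (cx, cy)."""
    return [[(c - cx) ** 2 + (r - cy) ** 2 for c in range(size)]
            for r in range(size)]


frame_a = bowl(17, 8.0, 8.0)
frame_b = bowl(17, 8.3, 8.2)  # same pattern moved 0.3 px right and 0.2 px down
flow = lucas_kanade_window(frame_a, frame_b, row=8, col=8)

flat = [[5.0] * 17 for _ in range(17)]
no_flow = lucas_kanade_window(flat, flat, row=8, col=8)  # textureless window
```

On this synthetic pattern the estimate recovers the sub-pixel shift almost exactly, because a quadratic pattern makes the linearization error cancel over a symmetric window; on real imagery the result is only approximate. The `None` return for the flat frame shows why a tracker needs textured regions to work with.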

3. Challenges and Limitations

Our engineers have extensively optimized the Optical Flow algorithm in the drone AI tracking module to deliver accurate target tracking even in complex, high-speed flight environments. However, Optical Flow still faces several challenges in real-world applications:

- Sensitivity to lighting changes: Optical Flow relies on the brightness constancy assumption, but real-world lighting changes frequently (e.g., shifting shadows, sunlight reflections), and these changes can lead to inaccurate motion estimation.

- Occlusion issues: When the target is obscured by other objects, Optical Flow may fail due to the lack of accurate visual information, preventing the tracker from correctly identifying the target’s motion path.
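The lighting limitation above can be made concrete with a small one-dimensional experiment. This is an illustrative sketch only: `flow_1d` is a hypothetical helper that applies the basic gradient-based estimate to a single row of pixels, and the ramp signal is synthetic.

```python
def flow_1d(signal1, signal2):
    """Gradient-based displacement estimate (pixels per frame) for a 1D signal."""
    num = den = 0.0
    for x in range(1, len(signal1) - 1):
        ix = (signal1[x + 1] - signal1[x - 1]) / 2.0  # spatial gradient
        it = signal2[x] - signal1[x]                  # temporal difference
        num += ix * it
        den += ix * ix
    return -num / den


ramp = [2.0 * x for x in range(10)]            # intensity rising by 2 per pixel
moved = [2.0 * (x - 0.5) for x in range(10)]   # same ramp shifted 0.5 px right

# Case 1: pure motion, constant lighting.
true_shift = flow_1d(ramp, moved)

# Case 2: the same motion, but the scene also gets brighter by 1 intensity unit.
brightened = [value + 1.0 for value in moved]
masked = flow_1d(ramp, brightened)

# Case 3: nothing moves, but the scene gets darker by 1 intensity unit.
dimmed = [value - 1.0 for value in ramp]
phantom = flow_1d(ramp, dimmed)
```

In the first case the estimate matches the real half-pixel shift. In the second, the brightness change cancels the intensity difference caused by the motion, so the estimate drops to zero. In the third, a static scene appears to move. The estimator has no way to tell an intensity change caused by motion from one caused by lighting, which is exactly what the brightness constancy assumption rules out.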

4. Solutions

(1) Application of Motion Models

During Optical Flow estimation, motion models can be used to predict an object’s trajectory. Using state estimators such as the Kalman filter and the particle filter, combined with a motion model of the target (for example, constant velocity), the system can estimate the target’s position and velocity over time. These estimates let the tracker predict where the target is likely to be while it is occluded or while lighting conditions change, reducing the likelihood of tracking failure.
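As a rough illustration of how a motion model bridges an occlusion, the sketch below runs a Kalman filter along one image axis. Everything specific in it is an assumption made for the example: the constant-velocity model, the class name `ConstantVelocityKalman1D`, the noise variances, and the convention that an occluded frame is represented by `None`.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, position, dt=1.0, process_var=0.01, measurement_var=1.0):
        self.p = position   # estimated position (pixels)
        self.v = 0.0        # estimated velocity (pixels per frame), unknown at start
        self.dt = dt
        self.q = process_var
        self.r = measurement_var
        # Covariance entries: position as uncertain as one measurement,
        # velocity very uncertain (assumed starting values).
        self.p00, self.p01, self.p10, self.p11 = measurement_var, 0.0, 0.0, 100.0

    def predict(self):
        """Advance the state one frame using the constant-velocity model."""
        dt = self.dt
        self.p += self.v * dt
        self.p00, self.p01, self.p10, self.p11 = (
            self.p00 + dt * (self.p01 + self.p10) + dt * dt * self.p11 + self.q,
            self.p01 + dt * self.p11,
            self.p10 + dt * self.p11,
            self.p11 + self.q,
        )

    def update(self, measured_position):
        """Correct the prediction with a new position measurement."""
        s = self.p00 + self.r            # innovation variance
        k0 = self.p00 / s                # gain for position
        k1 = self.p10 / s                # gain for velocity
        residual = measured_position - self.p
        self.p += k0 * residual
        self.v += k1 * residual
        self.p00, self.p01, self.p10, self.p11 = (
            (1.0 - k0) * self.p00,
            (1.0 - k0) * self.p01,
            self.p10 - k1 * self.p00,
            self.p11 - k1 * self.p01,
        )


def track(measurements):
    """Return one position estimate per frame; None marks an occluded frame."""
    kf = ConstantVelocityKalman1D(position=measurements[0])
    estimates = [kf.p]
    for z in measurements[1:]:
        kf.predict()
        if z is not None:   # target visible: use the measured position
            kf.update(z)
        estimates.append(kf.p)   # occluded: keep the model's prediction
    return estimates


# Target moving 2 px per frame, visible for 20 frames, then hidden for 5.
measurements = [2.0 * k for k in range(20)] + [None] * 5
estimates = track(measurements)
```

While the target is visible, the filter learns its velocity from the measured positions. During the five occluded frames it has no measurement to correct against, so it coasts on the learned velocity and stays close to the true position as long as the target keeps moving the same way. A particle filter follows the same predict-and-correct pattern but represents the state with a set of weighted samples, which suits targets whose motion is less predictable.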

(2) Integration of Deep Learning Algorithms

We have incorporated deep learning algorithms into Optical Flow estimation. Convolutional Neural Networks (CNNs) can automatically learn critical features from images and optimize the tracking process using temporal context, especially under varying lighting conditions or target occlusion. This significantly improves the robustness and accuracy of Optical Flow, enabling drones to efficiently track targets in more complex environments.

5. Other Improvements

In addition to the above solutions, our engineers have further enhanced the drone AI tracking module by integrating multi-channel data, adopting adaptive Optical Flow algorithms, and leveraging cutting-edge AI technologies.

6. Conclusion

Although the design of the drone AI tracking module may appear simple, it involves the integration of multiple complex technologies. From the foundational Optical Flow algorithm to the application of motion models, deep learning, multi-channel data fusion, and adaptive algorithms, each technology is aimed at improving the drone’s tracking capability in dynamic and complex environments. With these optimizations and integrations, the drone can maintain high accuracy and stability in tracking, even under challenging conditions such as lighting changes and target occlusion.
